Medical research has had an enormously positive impact on the quality of healthcare. It is estimated that advancements in medicine have increased our life expectancy by as many as 13 years since the 1900s. In more recent times, the rise of evidence-based medicine has led to improved clinical practice through meta-analyses, systematic reviews and the formation of clinical guidelines. So why is there so much talk about medical research facing a ‘replication crisis’?

In the past few years, there has been a growing controversy surrounding the validity of a number of cornerstone medical research papers. For example, Amgen, a US biotech company, attempted to replicate 53 high-impact cancer research studies and were reportedly able to replicate only six. Similarly, researchers from Bayer, a German pharmaceutical company, reported that they were only able to replicate 24 out of 67 studies. Moreover, John Ioannidis, MD, Professor of Medicine and Statistics at Stanford University—a strong voice in the replication debate—showed that of 45 of the most influential clinical studies, only 44% were successfully replicated.

Whether clinical medicine is really facing a crisis is a matter of discussion, but a fierce debate is sometimes useful to accelerate innovation. In this article, we discuss six factors that contribute to issues of replication in medical research and six initiatives that aim to counter them.

Replication Crisis Factor #1: The statistical significance threshold is too lenient

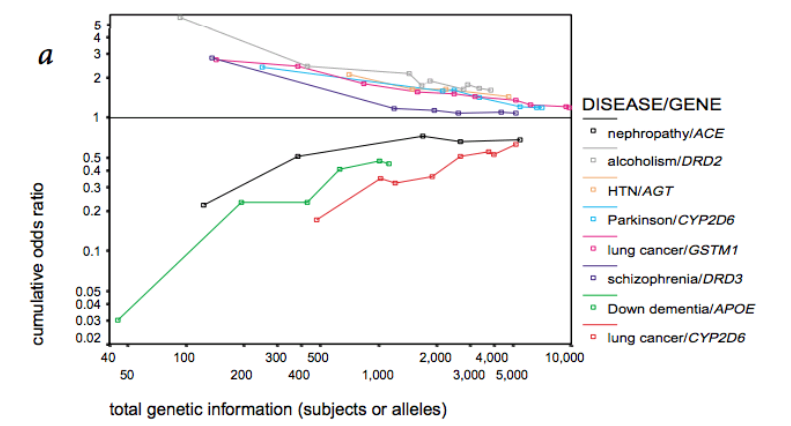

In medical research, the unwritten rule is that a significance level of 5% is acceptable. At the same time, some 35,000 new papers on RCTs were published in 2017 alone. Based on simple statistics, this means that a substantial number of published findings will be false positives. Moreover, because running clinical trials is expensive, the majority of studies are small and underpowered. In a small study, only a large measured effect can reach statistical significance, so the smaller the sample, the larger the reported effects are likely to be. As a result, many published studies are likely to report inflated effects. This is clearly illustrated by the figure below.

(Source: Ioannidis (METRICS), Nature Genetics, 2001)
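Both effects described above can be reproduced with a small simulation. The sketch below is illustrative only and is not taken from the cited paper: the function names, the true effect of 0.2 standard deviations, the sample sizes and the known-variance z-test are all assumptions chosen to keep the example short.

```python
import math
import random
from statistics import mean


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def significant_effects(true_effect, n_per_arm, n_studies=5000, alpha=0.05, seed=0):
    """Simulate two-arm trials and return the observed effects with p < alpha.

    Assumes outcomes with a known standard deviation of 1, so the observed
    difference in means is Normal(true_effect, sqrt(2 / n_per_arm)).
    """
    rng = random.Random(seed)
    se = math.sqrt(2.0 / n_per_arm)  # standard error of the difference in means
    kept = []
    for _ in range(n_studies):
        observed = rng.gauss(true_effect, se)
        if two_sided_p(observed / se) < alpha:
            kept.append(observed)
    return kept


if __name__ == "__main__":
    # No real effect: the share of "significant" trials should sit near alpha.
    null_hits = significant_effects(true_effect=0.0, n_per_arm=50)
    print(f"false positive rate: {len(null_hits) / 5000:.3f}")

    # A modest real effect (0.2 SD, an assumed value) in small vs. large trials.
    for n in (20, 500):
        hits = significant_effects(true_effect=0.2, n_per_arm=n)
        print(f"n={n}: mean significant effect = {mean(hits):.2f}")
```

Under these assumptions, the small trials that reach significance report an average effect several times larger than the true one, while the large trials stay close to it.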

Initiative:

The Stanford METRICS Institute is a research institute that studies how to make biomedical research more reliable. They make policy recommendations based on their research. For example, METRICS proposed lowering the significance threshold from 0.05 to 0.005 (!) in order to reduce the number of false positives in medical research. Would you dare to adopt this as the significance level for your study?
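To get a feel for what a stricter threshold buys, one can compute the share of significant findings that reflect a real effect (the positive predictive value). This is a back-of-the-envelope sketch, not the METRICS analysis: the function name and the inputs (10% of tested hypotheses true, 80% power) are assumptions for illustration.

```python
def positive_predictive_value(alpha, power, prior):
    """Share of statistically significant findings that reflect a true effect.

    alpha: significance threshold (false positive rate when there is no effect)
    power: probability of detecting an effect that really exists
    prior: fraction of tested hypotheses that are actually true
    """
    true_positives = power * prior
    false_positives = alpha * (1.0 - prior)
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # Assumed inputs for illustration: 10% of hypotheses true, 80% power.
    for alpha in (0.05, 0.005):
        ppv = positive_predictive_value(alpha, power=0.8, prior=0.1)
        print(f"alpha={alpha}: PPV = {ppv:.2f}")
```

With these assumed inputs, the positive predictive value rises from 0.64 at a threshold of 0.05 to roughly 0.95 at 0.005. Holding power fixed is a simplification: at a fixed sample size, a stricter threshold also lowers power, so studies would need to be larger to realize the full gain.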

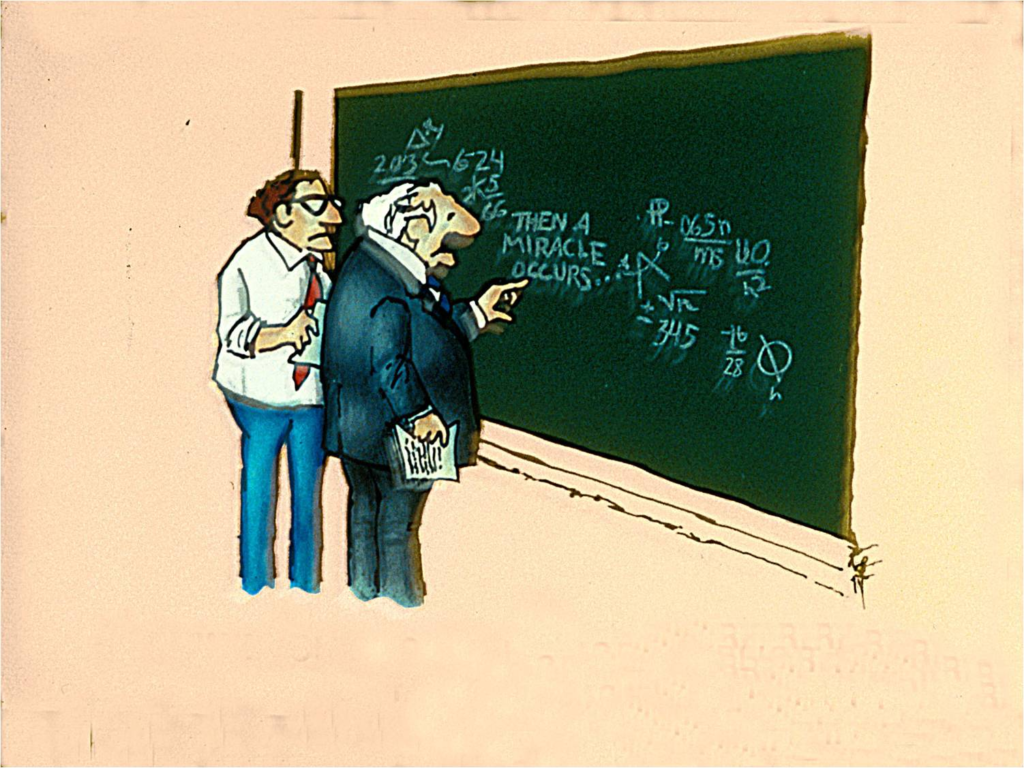

Replication Crisis Factor #2: Our incentives make us biased

Fierce competition, strong incentives to publish, and commercial interest have inadvertently led to both conscious and unconscious bias in the scientific literature. And the higher the vested interest in a field, the stronger the bias is likely to be. For example, a study of meta-analyses on antidepressants found that meta-analyses sponsored by industry were 22 times less likely to report caveats than those performed independently. Unfortunately, academic publications are not much better. From a random sample of 1,000 Medline abstracts, 96% reported significant p-values. This clearly shows the academic system’s thirst for publishing positive results.

Source: Github.io

Initiative:

The Lancet’s REWARD campaign promotes good research practices in biomedical research, such as publishing study protocols and properly disclosing conflicts of interest. Your institute can support this initiative!

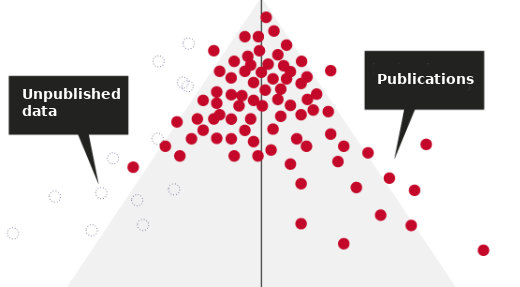

Replication Crisis Factor #3: ‘Negative’ results are never published

It is estimated that 50% of clinical studies performed are never published. The culprit lies both in the incentive structure of the academic system and with the pharmaceutical industry. First, researchers are incentivized to publish results that are as exciting as possible. Non-significant findings are not likely to help you get ahead. Second, very few journals accept papers with ‘null’ results, which makes such papers difficult to publish. Beyond the academic system, pharmaceutical companies have little incentive to submit a paper with evidence that their drug did not work. They would rather keep this to themselves. So, ‘failures’ remain largely unpublished.

Fig. Where have all the ‘failures’ gone? Adapted from Nature.

Initiative:

Positively Negative is a collection on PLOS ONE focused exclusively on publishing negative, null and inconclusive results. Submit your ‘unsuccessful’ studies here!

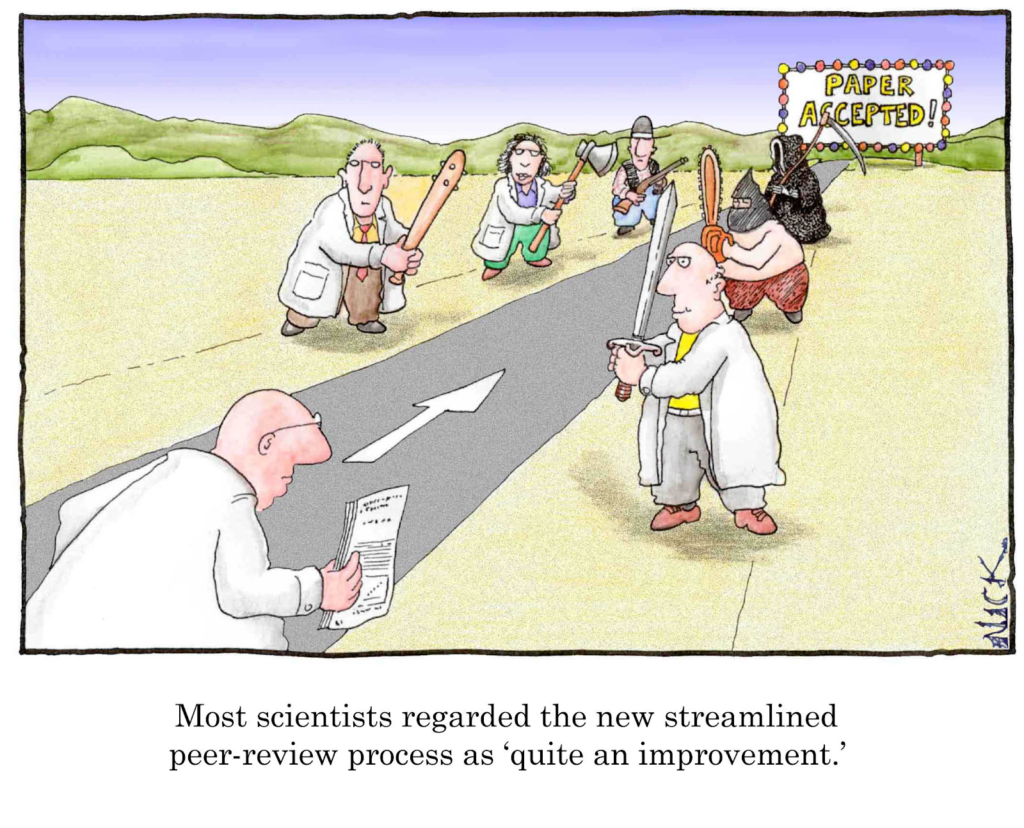

Replication Crisis Factor #4: The peer review system is broken

The peer review process is meant to examine papers for potential shortcomings. But with the increase in published papers, the need for peer reviewers has skyrocketed, while few career incentives exist for researchers to take part in peer review. As a result, it has become increasingly difficult for scientific journals to find competent reviewers, and it is not uncommon for a paper to be assessed by only one or two of them. The time spent on a review is also often limited, so reviewers can easily miss crucial shortcomings.

Initiative:

Several high-profile journals such as Nature have experimented with an open peer review process, in which papers were published online for public review. Other journals have suggested moving peer review to an earlier phase: instead of the final paper, peers would review protocols and methods prior to data collection. Papers would then be accepted regardless of their outcome.

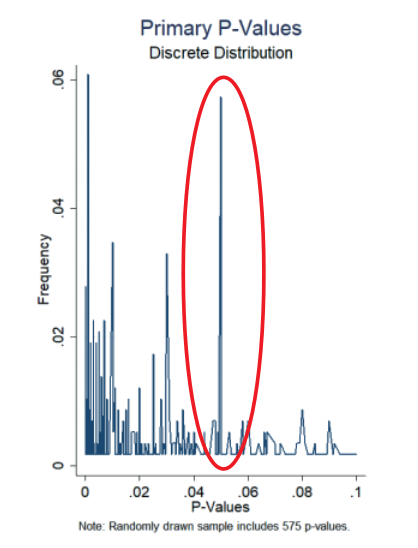

Replication Crisis Factor #5: Fraud and ‘p-hacking’

Unfortunately, cases of fraud in clinical research still make the news from time to time. One of the most notorious cases is the 2001 clinical study on the antidepressant paroxetine. The sponsoring company reported that the drug was safe for treating teenagers, but it later emerged that unpublished studies by the same company had shown that the drug increased the risk of suicidal behaviour. A more subtle form of fraud is p-hacking: the manipulation of data or analysis methods until the required significance level is reached. Researchers from Magdeburg showed that in a sample of 1,177 clinical trials, 7% reported a primary endpoint p-value just under 0.05, a clear indication of p-hacking.

Figure: Evidence of p-hacking in clinical trials (Magdeburg)
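One common route to p-hacking is to measure several outcomes and report whichever one happens to cross the threshold. The simulation below sketches why this inflates false positives. It is an illustration under stated assumptions, not the Magdeburg analysis: the function names, the five independent endpoints and the z-test are hypothetical choices.

```python
import math
import random


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_endpoints, n_trials=5000, alpha=0.05, seed=1):
    """Fraction of trials with no real effect that still end up 'significant'
    when the analyst reports the smallest p-value among n_endpoints
    independent outcomes."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # Under the null hypothesis every endpoint's z-statistic is N(0, 1).
        best_p = min(two_sided_p(rng.gauss(0.0, 1.0)) for _ in range(n_endpoints))
        if best_p < alpha:
            hits += 1
    return hits / n_trials


if __name__ == "__main__":
    print(f"one pre-specified endpoint: {false_positive_rate(1):.3f}")
    print(f"best of five endpoints:     {false_positive_rate(5):.3f}")
```

For five independent endpoints, the expected false positive rate is 1 - 0.95^5, or about 23%, compared with the nominal 5% for a single pre-specified endpoint. This is one reason reporting guidelines ask for the primary endpoint to be fixed before the data are collected.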

Initiative:

The EQUATOR network is an initiative that publishes reporting guidelines to tackle inadequate reporting and improve the reliability of medical research. Before starting your clinical study, read the CONSORT statement and check for relevant guidelines on the EQUATOR website.

Replication Crisis Factor #6: Little incentive to disclose data and methods

Finally, there is currently little incentive for researchers to share data sets, protocols or analysis methods. Without them, an analysis cannot be reproduced unless the clinical study is redone, which is costly and wasteful. Although most high-impact journals have policies in place for disclosing data, these rules are rarely enforced. Today, very few researchers willingly share their primary data set.

Initiative:

The FAIR initiative is committed to making research data Findable, Accessible, Interoperable and Reusable. Proponents of FAIR stress that opening up data allows it to be re-analyzed and re-used in further studies, which tremendously increases the value of the data. Castor EDC is committed to scalable FAIR data.

Conclusion

Whether or not medical science really is facing a replication crisis, the debate focuses our attention on how to improve the quality of our research processes. Now is as good a time as any for institutes, funders and researchers to review their methods and learn how we can improve the replicability and quality of research. Castor EDC plans to dedicate a series of articles to better understand the replication crisis and how we can make medical research more efficient. So don’t forget to subscribe!